Changing the incentive structure of social media to reduce threat and negativity

Our evolutionary instincts are wired to detect threat, and social media often exploits these instincts

Over thousands of years, evolution has left us with brains that are highly attuned to potential threats in our environment. These threats range from physical dangers like venomous snakes to social threats like someone in our community who seems angry. Our brains also react strongly to things that might harm our social groups or challenge our moral values, like observing something unfair, offensive, or disgusting. These reactions help us stay safe by alerting us to potential harm.

Alas, our brains occasionally mistake something harmless for a real threat. This is what scientists call a “proxy failure.” A “proxy” here means a stand-in: our brain treats negative or dangerous-seeming stimuli as a signal that something important or dangerous is happening, and it draws our attention to them even when nothing important or dangerous is there.

Our latest paper explains why this poses a problem on social media, which is built to show us things that grab our attention. Platforms like Facebook, Instagram, or TikTok use engagement-based algorithms that are designed to keep us online as long as possible. These algorithms assume that if content receives a lot of likes, clicks, or comments, it must be what people want to see.

But because our brains naturally pay more attention to threats or negative content, the algorithms that decide what we see end up promoting more of that kind of content: outrage, division, and fear. This dynamic also incentivizes social media users and content creators to “hack” our brain’s attention system by producing negative, attention-grabbing content.

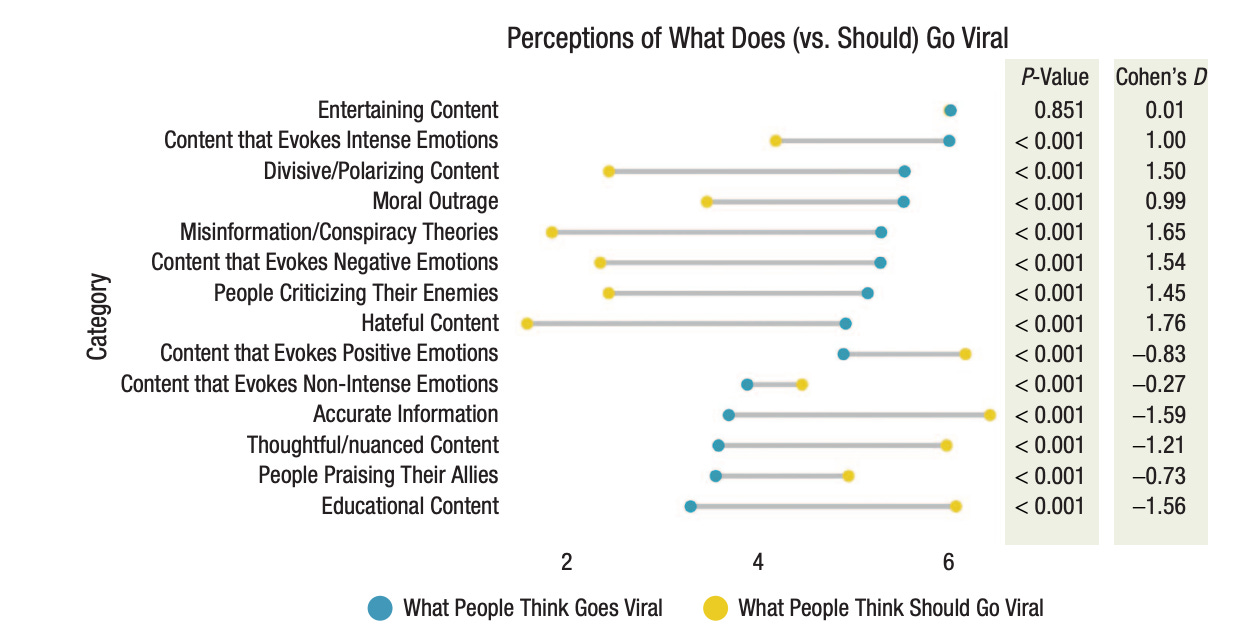

Importantly, most people don’t actually want this kind of content in their feeds. Research from our lab finds that while people realize that false, angry, or hateful content tends to go viral, they’d prefer to see posts that are true, positive, or informative (see figure below). The problem is that social media platforms rely on engagement (how much time we spend looking at something or interacting with it) as a shortcut for what we like. But this shortcut, another kind of proxy, doesn’t always reflect what we truly value, so it ends up promoting false, negative, or hostile content.
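The gap between engagement and what people say they want can be illustrated with a toy ranking sketch. The `Post` fields, the two ranking functions, and every number below are hypothetical values invented for illustration; they are not data from our studies and not any platform's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    negative: bool      # hypothetical label: hostile, false, or threatening content
    engagement: float   # hypothetical predicted likes/clicks/comments (the proxy)
    preference: float   # hypothetical "do users want to see this?" rating, 0 to 1


def rank_by_engagement(posts):
    # Engagement-based ranking: treats the proxy (engagement) as what users want.
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def rank_by_preference(posts):
    # Alternative ranking: orders posts by users' stated preferences instead.
    return sorted(posts, key=lambda p: p.preference, reverse=True)


def negative_share(feed, top_k):
    # Fraction of the top_k posts in a ranked feed that are labeled negative.
    top = feed[:top_k]
    return sum(p.negative for p in top) / len(top)


# Toy posts with invented numbers chosen only to illustrate the mechanism.
posts = [
    Post("Outraged thread about the other side", True, 0.9, 0.2),
    Post("Fear-inducing rumor", True, 0.8, 0.1),
    Post("Clear explainer on a new study", False, 0.4, 0.8),
    Post("A friend's good news", False, 0.5, 0.9),
]

print(negative_share(rank_by_engagement(posts), top_k=2))  # 1.0
print(negative_share(rank_by_preference(posts), top_k=2))  # 0.0
```

In this toy setup, the same four posts yield a top of the feed made entirely of negative content under engagement ranking and none under preference ranking. Real feeds are far more complex; the sketch only shows why optimizing a proxy can drift away from what users value.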

There’s some good news: small changes to how social media works can make a big difference. For example, when platforms change the incentives and reward people for posting accurate information, users are much more likely to share true information. Members of our lab have found that even adding simple buttons such as “trust,” “distrust,” or “misleading” can help people think more critically about what they see and make better choices about what to share.

Right now, many algorithms focus on “watch time” or how long people look at a post. Because negativity drives online information consumption, this system ends up spreading more harmful content. But if platforms gave people better tools to control their own feeds—like being able to easily say “I don’t want to see posts like this”—then users could shape their experience more intentionally, and reduce their exposure to unwanted threatening stimuli on social media.

Some of these tools already exist, but they’re hard to find or buried in settings menus. If platforms made these tools easier to use and more visible, it could help reduce exposure to harmful or upsetting content. Our research suggests that changing the incentives on social media platforms could help align them with the preferences of users and help slow the flood of hostile and negative content.

In the end, whether these changes happen depends on what social media companies care about most. If their main goal is making money, they’ll likely keep using systems that focus on engagement and sensationalism. But if they truly want to improve people’s experiences and support a healthier society, they should prioritize content that helps users feel informed, connected, and safe—even if it means people spend a little less time scrolling.

Citation: Robertson, C. E., del Rosario, K., Rathje, S., & Van Bavel, J. J. (2024). Changing the incentive structure of social media may reduce online proxy failure and proliferation of negativity. Behavioral and Brain Sciences, 47, e81. doi:10.1017/S0140525X23002935

News and Announcements

Congratulations to lab affiliate Kareena del Rosario for receiving the 2025 Stuart Cook Award! Kareena does research at the intersection of emotion and morality and will be defending her PhD later this month before starting her postdoc. The Stuart Cook Award is given annually to the top PhD student in social psychology, as voted by faculty. Kareena joins several former lab members who have won the award, including Claire Robertson, who won it last year.

Claire Robertson received an Honorable Mention for the 2025 Psychology of Technology Network Dissertation Award for her thesis on “Understanding the Causes and Effects of Online Extremity”! The Psychology of Technology Institute hosts an annual dissertation award program for doctoral students around the world to help them gain support and visibility for their work. Congrats to Claire, who will start a professor position at Colby College in the fall, where she will lead The Extremism and Polarization Lab!

Speaking of technology, Jay gave a talk this week at NYU Langone Medical School as part of a conference on the health implications of social media. He discussed the potential health risks of disconnecting from real people and replacing those connections with social media (along with other health risks of social media, like the spread of misinformation). This is an issue that public health experts are taking very seriously, and it was great to bring experts from multiple fields together to share insights.

This newsletter was written by Sarah Mughal and edited by Jay Van Bavel.

If you have any photos, news, or research you’d like to have included in this newsletter, please reach out to our Lab Manager Sarah (nyu.vanbavel.lab@gmail.com) who puts together our monthly newsletter. We encourage former lab members and collaborators to share exciting career updates or job opportunities—we’d love to hear what you’re up to and help sustain a flourishing lab community. Please also drop comments below about anything you like about the newsletter or would like us to add.


That’s it for this month, folks - thanks for reading, and we’ll see you next month!